The Public Voice: AI Universal Guidelines

The Public Voice: AI Universal Guidelines

We propose these Universal Guidelines to inform and improve the design and use of AI. The Guidelines are intended to maximize the benefits of AI, to minimize the risk, and to ensure the protection of human rights. These Guidelines should be incorporated into ethical standards, adopted in national law and international agreements, and built into the design of systems. We state clearly that the primary responsibility for AI systems must reside with those institutions that fund, develop, and deploy these systems.

Smart Dubai: AI PRINCIPLES AND ETHICS

AI’s rapid advancement and innovation potential across a range of fields is incredibly exciting. Yet a thorough and open discussion around AI ethics, and the principles organisations using this technology must consider, is urgently needed.

Dubai’s Ethical AI Toolkit has been created to provide practical help across a city ecosystem. It supports industry, academia and individuals in understanding how AI systems can be used responsibly. It consists of principles and guidelines, and a self-assessment tool for developers to assess their platforms.

Our aim is to offer unified guidance that is continuously improved in collaboration with our communities. The eventual goal is to reach widespread agreement and adoption of commonly-agreed policies to inform the ethical use of AI not just in Dubai but around the world.

IBM’s AI Fairness 360 Open Source Toolkit

IBM’s AI Fairness 360 Open Source Toolkit

This extensible open source toolkit can help you examine, report, and mitigate discrimination and bias in machine learning models throughout the AI application lifecycle. Containing over 30 fairness metrics and 9 state-of-the-art bias mitigation algorithms developed by the research community, it is designed to translate algorithmic research from the lab into the actual practice of domains as wide-ranging as finance, human capital management, healthcare, and education. We invite you to use it and improve it.

Markkula Center for Applied Ethics

The mission of the Ethics Center is to engage individuals and organizations in making choices that respect and care for others.

Vision

- We will be one of, if not the major ethics educator in the world.

- We will double down on forming the ethical character of the next generation, making our materials available to an increasing number of colleges and universities, increasing our resources on character education for K-12 public and faith-based schools.

- We will be a very prominent and trusted commentator and interpreter of ethical developments in society and its institutions, providing thoughtful analysis of the ethics of these breaking issues to an ever-widening set of traditional and new media platforms.

- We will expand our capacity to respond quickly and do in-depth ethical analysis of unexpected and truly new social and technological problems which arise.

Berkman Klein Center Digital Literacy Resource Platform

The Digital Literacy Resource Platform (DLRP) is an evolving collection of learning experiences, visualizations, and other educational resources (collectively referred to as “tools”) designed and maintained by the Youth and Media team at the Berkman Klein Center for Internet & Society at Harvard University. You can you use the DLRP to learn about different areas of youth’s (ages 11-18) digitally connected life.

Algo:Aware

The AlgoAware study was procured by the European Commission to support its analysis of the opportunities and challenges emerging where algorithmic decisions have a significant bearing on citizens, where they produce societal or economic effects which need public attention.

The Montreal Declaration for a Responsible Development of Artificial Intelligence: a participatory process

The Montreal Declaration for a Responsible Development of Artificial Intelligence: a participatory process

The Forum on the Socially Responsible Development of Artificial Intelligence, held in Montreal on November 2 and 3, 2017, concluded with the unveiling of the preamble of a draft declaration to which the public is now invited to contribute in a co-construction process involving all sectors of society. The Declaration sets out the unprecedented ethical challenges that the development of artificial intelligence entails in the short and long term. The principles and recommendations to which the public is invited to contribute make up a series of ethical guidelines for the development of AI.

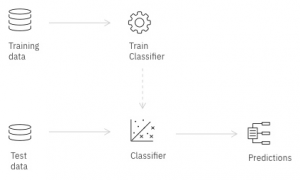

Centre for Democracy & Technology – Digital Decisions Tool

Centre for Democracy & Technology – Digital Decisions Tool

CDT is a champion of global online civil liberties and human rights, driving policy outcomes that keep the internet open, innovative, and free. The Digital Decisions Tool translates principles for fair and ethical automated decision-making into a series of questions that can be addressed during the process of designing and deploying an algorithm.

5Rights

5Rights works with children and young people helping them to articulate their needs and desires for the digital environment.

5Rights publishes and disseminates research that explores the needs of children and childhood where it intersects with the digital environment. We regularly contribute to public consultations, key notes and panels, appear on broadcast media and generate press coverage.

Council for Big Data, Ethics, and Society

In collaboration with the National Science Foundation, the Council for Big Data, Ethics, and Society was started in 2014 to provide critical social and cultural perspectives on big data initiatives. The Council brings together researchers from diverse disciplines — from anthropology and philosophy to economics and law — to address issues such as security, privacy, equality, and access in order to help guard against the repetition of known mistakes and inadequate preparation. Through public commentary, events, white papers, and direct engagement with data analytics projects, the Council will develop frameworks to help researchers, practitioners, and the public understand the social, ethical, legal, and policy issues that underpin the big data phenomenon.

The Council is directed by danah boyd, Geoffrey Bowker, Kate Crawford, and Helen Nissenbaum.

Algorithm Watch

Algorithm Watch

The more technology develops, the more complex it becomes. AlgorithmWatch believes that complexity must not mean incomprehensibility (read our ADM manifesto).

AlgorithmWatch is a non-profit initiative to evaluate and shed light on algorithmic decision making processes that have a social relevance, meaning they are used either to predict or prescribe human action or to make decisions automatically.

Committee of experts on Internet Intermediaries (MSI-NET)

Committee of experts on Internet Intermediaries (MSI-NET)

The Committee of experts on Internet Intermediaries (MSI-NET) will prepare standard setting proposals on the roles and responsibilities of Internet intermediaries. The expected results of the new sub-group will be the preparation of a draft recommendation by the Committee of Ministers on Internet intermediaries and the preparation of a study on human rights dimensions of automated data processing techniques (in particular algorithms) and possible regulatory implications.

Committee of experts on Human Rights Dimensions of automated data processing and different forms of artificial intelligence (MSI-AUT)

The MSI-AUT will prepare follow up with a view to the preparation of a possible standard setting instrument on the basis of the study on the human rights dimensions of automated data processing techniques (in particular algorithms and possible regulatory implications).

MSI-AUT will also study the development and use of new digital technologies and services, including different forms of artificial intelligence, as they may impact peoples’ enjoyment of fundamental rights and freedoms in the digital age – with a view to give guidance for future standard-setting in this field. Furthermore, MSI-AUT will study the impact of civil and administrative defamation laws and their relation to the criminal provisions on defamation, as well as jurisdictional challenges in the application of those laws in the international digital environment.

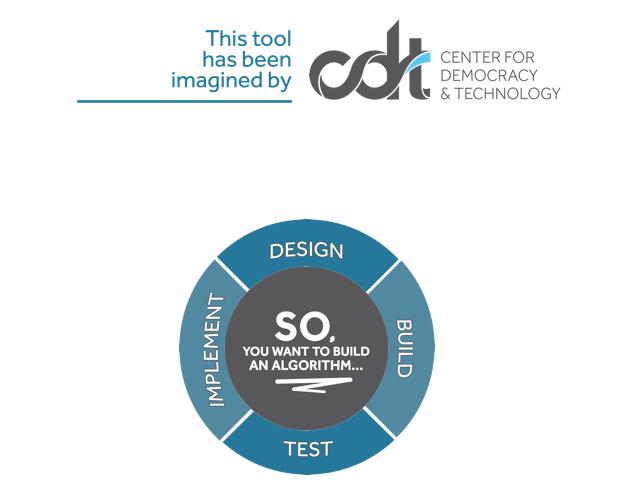

IEEE Global Initiative for Ethical Considerations in the Design of Autonomous Systems

IEEE Global Initiative for Ethical Considerations in the Design of Autonomous Systems

An incubation space for new standards and solutions, certifications and codes of conduct, and consensus building for ethical implementation of intelligent technologies

The purpose of this Initiative is to ensure every technologist is educated, trained, and empowered to prioritize ethical considerations in the design and development of autonomous and intelligent systems.

Algorithm-based recommendation and information diversity on the web

Algorithm-based recommendation and information diversity on the web

-

- how diverse is the information produced by users ?

-

- what is its socio-semantic structure, how is information

-

- distributed over actors of a given online ecosystem ?

- how diverse is information consumption?

-

- how do online platforms present, render and filter information ?

- what kind of bias is being created by the underlying algorithms and their principles ? (e.g. PageRank, NewsFeed)

facebook.tracking.exposed

facebook.tracking.exposed

Developing a tool to help increase transparency behind personalization algorithms, so that people can have more effective control of their online Facebook experience and more awareness of the information to which they are exposed.

Fairness, Accountability, and Transparency in Machine Learning

Fairness, Accountability, and Transparency in Machine Learning

The FAT/ML workshop series brings together a growing community of researchers and practitioners concerned with fairness, accountability, and transparency in machine learning.

The past few years have seen growing recognition that machine learning raises novel challenges for ensuring non-discrimination, due process, and understandability in decision-making. In particular, policymakers, regulators, and advocates have expressed fears about the potentially discriminatory impact of machine learning, with many calling for further technical research into the dangers of inadvertently encoding bias into automated decisions.

At the same time, there is increasing alarm that the complexity of machine learning may reduce the justification for consequential decisions to “the algorithm made me do it.”

The goal of our 2016 workshop is to provide researchers with a venue to explore how to characterize and address these issues with computationally rigorous methods.

This year, the workshop is co-located with two other highly related events: the Data Transparency Lab Conference and the Workshop on Data and Algorithmic Transparency.

An interesting proposal from the FAT/ML community:

Principles for Accountable Algorithms and a social Impact Statement for Algorithms

Enforce project

Enforce project

This project intends to provide answers to the protection of the intertwined personal rights of non-discrimination and privacy-preservation both from a legal and a computer science perspective. On the legal perspective, the objective consists of a systematic and critical review of the existing laws, regulations, codes of conduct and case law, and in the study and the design of quantitative measures of the notions of anonymity, privacy and discrimination that are adequate for enforcing those personal rights in ICT systems. On the computer science perspective, the objective consists of designing legally-grounded technical solutions for discovering and preventing discrimination in DSS and for preserving and enforcing privacy in LBS.

IEEE P7003 Working Group: Developing a Standard for Algorithm Bias Considerations

Scope: This standard describes specific methodologies to help users certify how they worked to address and eliminate issues of negative bias in the creation of their algorithms, where “negative bias” infers the usage of overly subjective or uniformed data sets or information known to be inconsistent with legislation concerning certain protected characteristics (such as race, gender, sexuality, etc); or with instances of bias against groups not necessarily protected explicitly by legislation, but otherwise diminishing stakeholder or user well being and for which there are good reasons to be considered inappropriate.

Possible elements include (but are not limited to): benchmarking procedures and criteria for the selection of validation data sets for bias quality control; guidelines on establishing and communicating the application boundaries for which the algorithm has been designed and validated to guard against unintended consequences arising from out-of-bound application of algorithms; suggestions for user expectation management to mitigate bias due to incorrect interpretation of systems outputs by users (e.g. correlation vs. causation)

Algorithmic justice League

Algorithmic bias like human bias can result in exclusionary experiences and discriminatory practices. The Algorithmic justice League works to Support Inclusive Technology. To do this they ask support to Highlight Bias through:

MEDIA – Help raise awareness about existing bias in coded systems

RESEARCH – Support the development of tools for checking bias in existing data and software

iRIGHTS international

Digitisation for Democracy and the Public Good

Artificial Intelligence and Law in New Zealand

A three-year project to evaluate legal and policy implications of artificial intelligence (AI) for New Zealand. The project is based at the University of Otago, and funded by the New Zealand Law Foundation.