Drawing on Responsible Research and Innovation, the project will follow a co-constructive governance approach. It will work with a wide range of stakeholders, including “digital native” youths who interact with information-controlling algorithms on a daily basis, their educators and guardians, online agencies, privacy and security organisations, and internet interest groups.

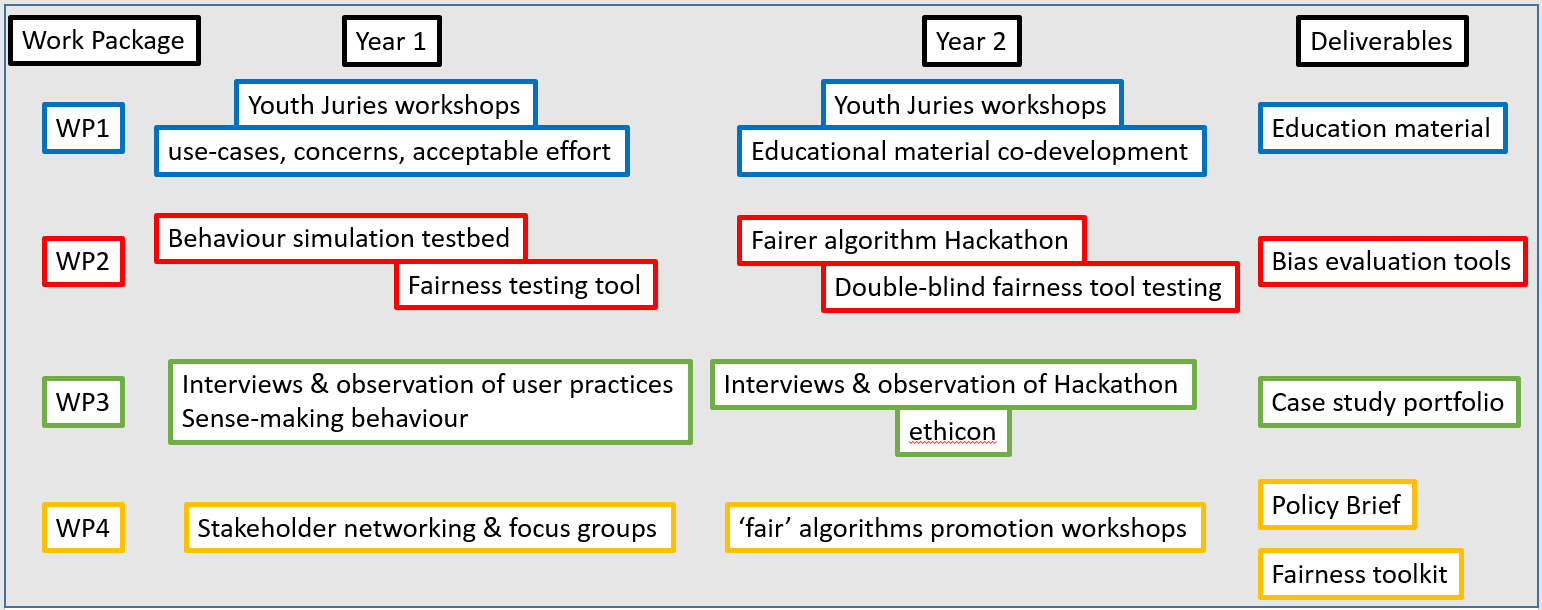

The project will consist of four interconnected Work Packages (WPs) aligned with the four action objectives:

- WP1 [led by Nottingham] will use ‘Youth Juries’ workshops with 13- to 17-year-old “digital natives” to co-produce citizen education materials on the properties of information filtering/recommendation algorithms;

- WP2 [led by Edinburgh] will use co-design workshops, hackathons and double-blind testing to produce user-friendly open source tools for benchmarking and visualizing biases in filtering/recommendation algorithms;

- WP3 [led by Oxford] will design requirements for filtering/recommender algorithms that satisfy subjective criteria of bias avoidance based on interviews and observation of users’ sense-making behaviour when obtaining information through social media;

- WP4 [co-led by Oxford and Nottingham] will develop policy briefs for an information and education governance framework capable of responding to the demands of the changing landscape in social media usage. These will be developed through broad stakeholder focus groups that include representatives of government, industry, third-sector organizations, educators, lay-people and young people (a.k.a. “digital natives”).

Each of the four WPs will produce intermediate results that feed directly into the development of the other WPs.

To ensure direct ‘hands-on’ understanding of the work the other teams are doing, at least one member from each team will participate in every event in each series of workshops, ‘juries’ and hackathons.

The WPs in this project are timely in addressing challenges from across the Digital Economy Programme, since algorithms are now ubiquitous intermediaries between humans and the vast quantities of data that drive the Digital Economy. In line with the ‘systems and their ecosystems’ theme, our project follows the Human Data Interaction (HDI) philosophy of placing the human at the centre of the information-access experience. The transformational power of personal data lies in its potential to optimize digital services to the specific needs and desires of an individual, but this optimization must be done without making the services seem creepy to users through a lack of transparency. We therefore seek to provide users with a better understanding of the core principles of information control algorithms, through educational materials and tools for testing and visualizing the response behaviour of existing systems. We also seek to support the design of future algorithms by better understanding the properties that determine users’ subjective sense of ‘fairness’.

The work is user-led. It grew out of comments we gathered during previous user workshops held as part of existing projects (CaSMa/iRights, SmartSociety, Digital Wildfires). The project involves stakeholder participation at all stages, through focus groups, co-design workshops, hackathons and double-blind prototype testing.