On February 3rd, a group of twenty-five stakeholders joined us at the Digital Catapult in London for our first discussion workshop.

The User Engagement workpackage of the project brings together professionals from industry, academia, education, NGOs and research institutes to discuss societal and ethical issues surrounding the design, development and use of algorithms on the internet. We aim to create a space where these stakeholders can share their various concerns and perspectives, including where they disagree. For example, participants from industry often view algorithms as proprietary and commercially sensitive, whereas those from NGOs frequently call for greater transparency in algorithmic design. It is important for us to draw out these differing perspectives and understand in detail the reasoning behind them. Combined with the outcomes of the other project workpackages, this will let us identify points of resolution and produce outputs that seek to advance responsibility on algorithm-driven internet platforms.

The Digital Catapult has great views of central London

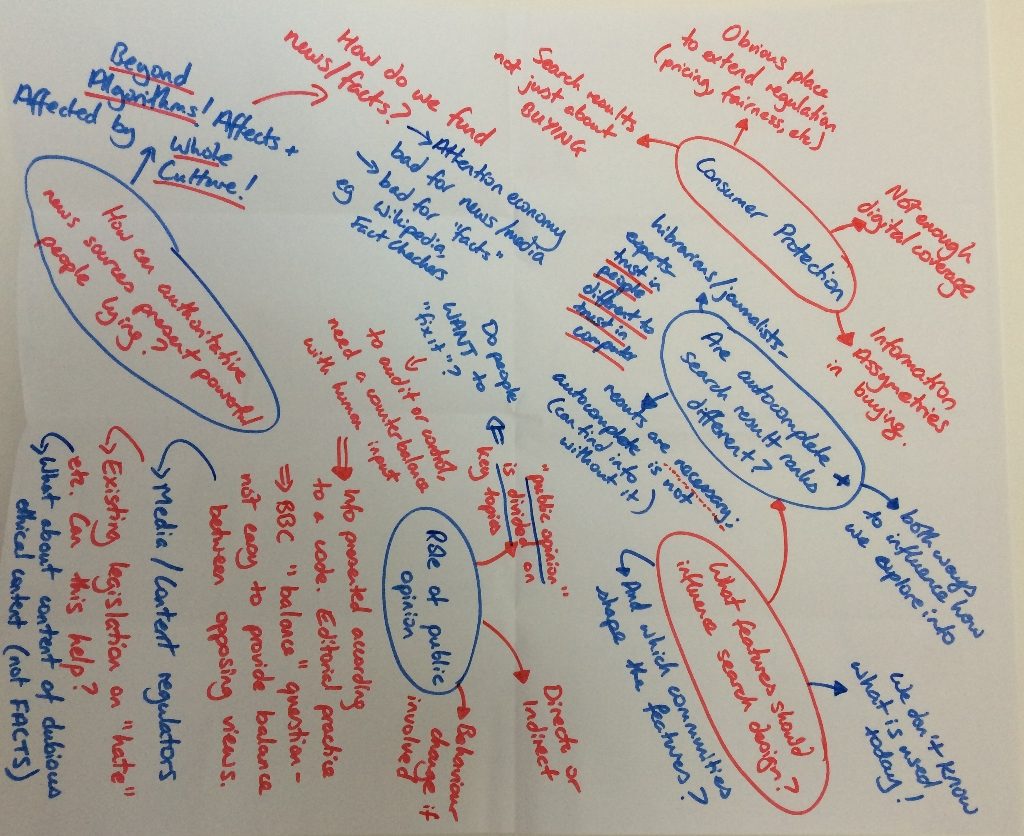

Our first workshop centred on the topic of algorithms and fairness. We were fortunate to be joined by stakeholders from a diverse range of backgrounds. Surrounded by the Digital Catapult’s stunning views of central London, the room was filled with animated discussion and constructive disagreement. After a brief introduction to the UnBias project, participants separated into four groups, each discussing a case study that highlighted a central issue in algorithmic fairness, such as personalisation or transparency. The groups reported back on their discussions and the floor was then opened for debate.

The stakeholders were highly engaged in the discussion and raised a number of important questions. These included: how can we engender meaningful transparency, where all users can understand the operation of an algorithm and its potential outcomes? If we attempt to make the data that algorithms work on and filter more ‘fair’, do we risk (unintentionally) embedding our own biases? Possible solutions were also put forward. Suggestions included accreditation systems to authenticate algorithmically filtered content, and small payments that would allow users to exert some control over the personalisation of their accounts.

Some of the UnBias team and our stakeholder participants at the end of the workshop

Overall, the discussions across the workshop revealed numerous ethical concerns and tensions, and also produced a range of interesting insights. For us, they highlighted the complexity and subjectivity that surround notions of algorithmic fairness. So far we have taken some first steps – through this workshop and other project activities – to unpack what fairness means to different stakeholders, but it is clear that there is much still to be done.
