We’ve started compiling some datasets and APIs relevant to the hackathon. We encourage participants to use, repurpose, and combine them in creative ways!

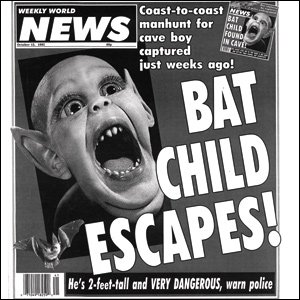

News, Fake News & Social Media

- Lists of fake news web sites

Some independently curated lists of Web sites that are considered to publish “fake news”, according to their own definitions (misleading, conspiracy theories, hyper-partisan, etc.)

opensources

marktron/fakenews

BuzzFeed’s “top 50 fake news hits on Facebook” data

A blacklist of mainly Italian sites

- News popularity datasets

Datasets reporting the number of social media shares for a list of news articles

UCI Dataset 1

Dataset 2 (University of Porto) and associated paper

- News popularity API

www.crowdtangle.com

Online Rating systems

Online rating and recommender systems commonly suffer from a “rich get richer” effect, and many reviews are also known to be “fake”.

- Yelp Academic dataset

https://www.yelp.com/dataset

- Last.fm dataset

http://www.dtic.upf.edu/~ocelma/MusicRecommendationDataset/

- Epinions dataset

https://projet.liris.cnrs.fr/red/

(Un-)Ethical online user tracking and data collection

- Princeton Web census

Reports on tracking, fingerprinting, and other ethically questionable practices by the top 1M web sites (data available).

https://webtransparency.cs.princeton.edu/webcensus/

- Sociam/Oxford data on mobile app permissions and connections to sketchy servers

[coming soon]

Language related

- Wikipedia toxic comments dataset:

A dataset of comments from Wikipedia talk pages, with crowdsourced annotations flagging toxic comments and personal attacks.

Dataset on figshare.com

- Conversation AI’s Perspective API:

An API giving ML-assessments of toxicity for natural language comments, built by the Conversation AI project (associated with Google)

https://www.perspectiveapi.com
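As a starting point for experiments with the API, here is a minimal sketch of how a request could be built and a response parsed. The endpoint URL, the `requestedAttributes` request shape and the `attributeScores` / `summaryScore` response shape are written from memory of the public documentation and should be treated as assumptions to verify there; the helper names (`build_request`, `extract_scores`, `analyze`) are our own.

```python
import json
import urllib.request

# Assumed endpoint of the Perspective (Comment Analyzer) API.
# Check the official documentation before relying on it.
ANALYZE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"


def build_request(text, attributes=("TOXICITY",)):
    """Build the JSON body for one comment (assumed request shape)."""
    return {
        "comment": {"text": text},
        "requestedAttributes": {name: {} for name in attributes},
    }


def extract_scores(response):
    """Map each returned attribute name to its summary score value."""
    return {
        name: data["summaryScore"]["value"]
        for name, data in response.get("attributeScores", {}).items()
    }


def analyze(text, api_key):
    """Send one comment to the API. Needs network access and a valid key."""
    body = json.dumps(build_request(text)).encode("utf-8")
    req = urllib.request.Request(
        f"{ANALYZE_URL}?key={api_key}",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return extract_scores(json.load(resp))
```

Keeping the request building and response parsing separate from the network call makes it easy to score many comments in a loop, for example to compare scores for sentences that differ only in the group they mention.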

An analysis of the associated biases (reflecting social biases against groups such as women, Muslims, and LGBT people):

Blog post on Medium

Technical presentation from AIES conference 2018

- Biased and de-biased Word Embeddings:

Word embeddings (vector representations of words, capturing their semantics) learnt from a large corpus incorporate the social biases expressed in the corpus.
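To make this concrete, here is a small sketch that measures how strongly a word vector leans along a “gender direction” (the normalized difference between the vectors for “he” and “she”), and then removes that component. The 3-dimensional vectors are invented for illustration; real embeddings have hundreds of dimensions. The `neutralize` function is a simplified version of the general “neutralize” idea and is not the code from any particular tool.

```python
import math

# Toy vectors, made up for this example only.
toy_vectors = {
    "he": [1.0, 0.2, 0.1],
    "she": [-1.0, 0.2, 0.1],
    "engineer": [0.4, 0.9, 0.3],
    "nurse": [-0.5, 0.8, 0.3],
    "table": [0.0, 0.1, 1.0],
}


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def gender_direction(vectors):
    """Unit vector pointing from 'she' towards 'he'."""
    diff = [h - s for h, s in zip(vectors["he"], vectors["she"])]
    return normalize(diff)


def bias_score(word, vectors):
    """Cosine between the word vector and the gender direction.

    Positive values lean towards 'he', negative towards 'she'.
    """
    return dot(normalize(vectors[word]), gender_direction(vectors))


def neutralize(word, vectors):
    """Remove the component of the word vector along the gender direction."""
    g = gender_direction(vectors)
    v = vectors[word]
    proj = dot(v, g)
    return [x - proj * gi for x, gi in zip(v, g)]


for w in ("engineer", "nurse", "table"):
    print(w, round(bias_score(w, toy_vectors), 3))
```

With these toy numbers, “engineer” scores positive, “nurse” negative and “table” zero; after `neutralize`, a vector has no component left along the gender direction.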

word2vec tool for learning “raw” word embeddings.

Tutorial to remove gender bias from such word embeddings (with dataset of de-biased word embeddings):

https://github.com/tolga-b/debiaswe

- African-American English (AAE) tweets

http://slanglab.cs.umass.edu/TwitterAAE/

AAE is a dialect of English used predominantly by Black Americans, with roots in African languages. It is often not recognized as English by standard NLP tools (particularly when combined with abbreviations in short tweets), and is associated with social biases related to education and poverty.

Police and justice related

- New York City stop-and-frisk data

The NYPD had a controversial “stop-and-frisk” policy for many years, under which officers could stop people on the street on suspicion of criminal activity and “frisk” them. These stops were found to disproportionately affect Black and Hispanic people, and were later deemed illegal. Each stop and its outcome was recorded in a dataset that is now available:

https://www.nyclu.org/en/stop-and-Frisk-data

- COMPAS dataset

The infamous COMPAS tool aimed to predict how likely a convicted criminal was to re-offend, and was found to be biased against Black people in a well-known ProPublica study. The dataset and study are available at:

https://github.com/propublica/compas-analysis

- Feedback loops in predictive policing tools

[coming soon]
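For the risk-prediction and policing datasets in this section, one simple way to look for bias is to compare error rates across groups, for example how often people who did not re-offend were nevertheless flagged as high risk. The sketch below computes this false positive rate per group. The records and group labels are hypothetical and do not come from any of the datasets above; real data would first need to be loaded and mapped to this `(group, predicted_high_risk, reoffended)` form.

```python
from collections import defaultdict

# Hypothetical records: (group, predicted_high_risk, reoffended).
records = [
    ("A", True, False), ("A", True, False), ("A", False, False),
    ("A", False, False), ("A", True, True), ("A", False, True),
    ("B", True, False), ("B", False, False), ("B", False, False),
    ("B", False, False), ("B", True, True), ("B", True, True),
]


def false_positive_rates(rows):
    """Per group: share of non-re-offenders who were flagged as high risk."""
    flagged = defaultdict(int)    # non-re-offenders predicted high risk
    negatives = defaultdict(int)  # all non-re-offenders
    for group, predicted, actual in rows:
        if not actual:
            negatives[group] += 1
            if predicted:
                flagged[group] += 1
    return {g: flagged[g] / negatives[g] for g in negatives}


rates = false_positive_rates(records)
for group, rate in sorted(rates.items()):
    print(group, rate)
```

A large gap between groups in this rate is one signal worth investigating further; it is only one of several fairness measures, and different measures can disagree.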

Other

- German credit dataset

https://archive.ics.uci.edu/ml/datasets/Statlog+%28German+Credit+Data%29

- Chilean university selection exam

[must request it from data owners]

- Diversity.ai project:

Evaluating race and sex diversity in the world’s largest companies using deep neural networks.

The authors obtained pictures of the executives of the 500 largest companies from web sites (acknowledging some risk of errors) and used DNN tools to predict gender, age, and race.

The results were compared with statistical (census) data from the relevant countries.

Data available at: https://drive.google.com/drive/folders/0B91aiG5t1MdCNkx3NGg3U3QzakE