Many multi-user scenarios are characterised by a combinatorial nature, i.e., an algorithm can take meaningful decisions for the users only if all their requirements and preferences are considered at the same time to select a solution from a huge potential space of possible system decisions. Sharing economy application, where users aim to find peers to form teams with in order to accomplish a task, and situations in which a limited number of potentially different resources, e.g. hotel rooms, must be distributed to users who have preferences over them are examples of such scenarios.

To get a glimpse of the problems that can arise if an algorithm does not consider the requirements of all the users at the same time, consider the following example. Assume there are three restaurants, one that serves only vegetarian meals, one only fish, and one meat, and that each of these restaurant has only one table available. Moreover, assume that there are three families; the first family is vegetarian; the second family does not eat meat and would prefer a vegetarian meal or a fish dish; and the third family has no food restriction but they would prefer to eat fish over vegetarian meals over meat. A platform that has knowledge about restaurants’ characteristics and availability, and families’ preferences and restrictions has to suggest a restaurant to each family. Note that the goal of the platform is to lead each family to a restaurant where they will be able to eat. If the platform uses an algorithm that provides a recommendation to the family by considering only their individual preferences, then it will suggest the vegetarian restaurant to both family one and two, even if there is only one table available and, thus, one family won’t be able to have dinner. If,

instead, all the preferences are consider at the same time, the platform can suggest the vegetarian restaurant to the first family, the fish one at the second family, and the one specialised in meat to the third family.

Generally, this type of scenario is characterised by a collective of users with conflicting preferences and constraints that limit the feasible and desirable solutions an algorithm can propose to its users. Since it is unrealistic to expect that different algorithms that satisfy the priorities of each user can be used simultaneously on the same platform, we face the problem of selecting an algorithm that satisfies certain fairness criteria acceptable to all users, even when each of them, individually, has conflicting ideas about what the right criteria should be.

To explore this problem we have recently conducted a small-scale pilot experiment based on a simple scenario the participants were familiar with. Specifically, we considered the problem of allocating coursework (essay) topics to students in a university course, where each student must have exactly one coursework topic assigned to them, but no topic can be assigned to more than one student. Each student expresses her preferences over topics provided by the course lecturer by assigning each of them a score representing how happy she would be if the topic were assigned to her. Scores range from 1 to 7, and are labelled with qualitative descriptions ranging from ”very unhappy” (=1) to ”very happy” (=7), with in-between values for ”slightly” (un)happy, and the midpoint of the scale (4) corresponding to ”indifferent”.

Consider the simple example in which two students assign 7 to the same topic and 1 to all the other topics. Obviously, it is not possible to consider the two students separately, so we need a definition of fairness for the collective of users to determine what they could agree would be the “fair” thing for an algorithm to do in such situations. The experiment was intended to explore the problems related to identifying a collectively acceptable definition of fairness, but also to observe how transparency of the values embedded in an algorithm affect users’ judgement of the fairness of these algorithms. The experiment participants were nine computer science students at the University of Edinburgh.

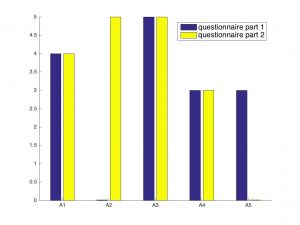

In order to investigate the problems related to identifying a commonly acceptable definition of fairness, we used five different algorithms to allocate coursework topics to students, showed students the results, and asked them to choose their most preferred and least preferred algorithm. At first, we asked this question without providing the students with any information about the nature of the algorithms, but in a second step, we provided an informal description of the algorithms and asked the same question.

We observed that, even if the set of students is small, it is not possible to identify a unique mechanism that is considered most or least fair by all students. Indeed, four out of the five suggested algorithms were selected as the most and least preferred ones by at least one student.

Moreover, we observed some drastic changes in students’ choices before and after explaining how the algorithms work. For example, the only algorithm that was not selected as “most preferred’’ when students were not aware of its details, becomes (one) of the preferred ones for five out of nine students when the description of the algorithm is provided.

These results clearly support the need to investigate more how fairness can be achieved in (collective) combinatorial scenarios, and how meaningful transparency can be achieved, especially when algorithms are used by people with different backgrounds.

One thought on “How hard is to be fair in multi-user combinatorial scenarios?”