An important topic considered this year at the International Conference on Neural Information Processing Systems (NIPS), one of the world's leading venues for machine learning and artificial intelligence research, is the connection between machine learning, law and ethics. In particular, a paper presented by Moritz Hardt, Eric Price, and Nathan Srebro focused on “Equality of Opportunity in Supervised Learning”.

Supervised learning is one of the main machine learning techniques for predicting future events from previous data, in which each entry is described by a set of features and the events that actually occurred are known. This previous data is called training data because the learning algorithm uses it to infer correlations between inputs (the features of the data) and outputs (the events). For example, a dataset recording which movies given users have watched (i.e., the events) can be used to predict which movies a new user may like. In this case, the features the learning algorithm uses can relate to both users (e.g., age and gender) and movies (e.g., category and director), and the events in the dataset are the movies actually watched.
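The train-then-predict loop described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration rather than a realistic recommender: the feature names, the tiny dataset and the functions `train` and `predict` are invented here, and the "learning" step simply memorizes, for every combination of feature values, which outcome occurred most often in the training data.

```python
from collections import Counter, defaultdict

# Hypothetical training data: each entry pairs features describing a user and a
# movie with the realized event (1 = the user watched the movie, 0 = did not).
TRAINING_DATA = [
    ({"age_group": "18-25", "category": "sci-fi"}, 1),
    ({"age_group": "18-25", "category": "sci-fi"}, 1),
    ({"age_group": "18-25", "category": "drama"}, 0),
    ({"age_group": "40-60", "category": "drama"}, 1),
    ({"age_group": "40-60", "category": "sci-fi"}, 0),
    ({"age_group": "40-60", "category": "drama"}, 1),
]


def train(data):
    """Count, for each combination of feature values, how often each outcome occurred."""
    counts = defaultdict(Counter)
    for features, label in data:
        key = tuple(sorted(features.items()))
        counts[key][label] += 1
    return counts


def predict(model, features, default=0):
    """Predict the outcome seen most often in training for these feature values."""
    key = tuple(sorted(features.items()))
    if key not in model:
        return default  # combination never seen in training: fall back to a guess
    return model[key].most_common(1)[0][0]


model = train(TRAINING_DATA)
print(predict(model, {"age_group": "18-25", "category": "sci-fi"}))  # 1
print(predict(model, {"age_group": "40-60", "category": "sci-fi"}))  # 0
```

Real systems replace the lookup table with a model that generalizes to feature combinations it has never seen, but the structure is the same: features and known outcomes go in, a predictor for new entries comes out.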

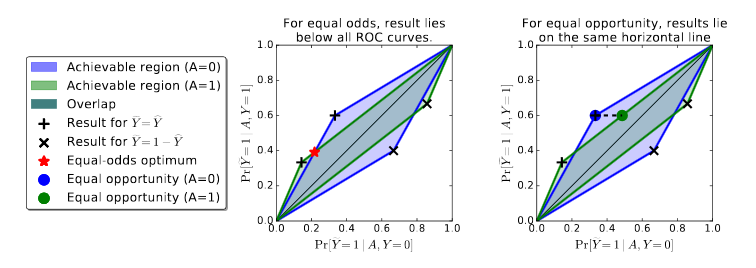

As pointed out in a previous post and explained in this article (https://research.google.com/bigpicture/attacking-discrimination-in-ml/), the use of these techniques to predict future events is sometimes controversial because of their potential to introduce discrimination.

Moritz Hardt explains that the work he carried out with Price and Srebro aims to tackle this problem in supervised learning by optimally adjusting any learned predictor so that it does not discriminate on the basis of protected features (such as race or gender).
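Equality of opportunity, the criterion in the paper's title, asks that a binary predictor have the same true positive rate in every protected group: among the entries whose true outcome is positive, the chance of a positive prediction should not depend on the protected feature. In the paper, the adjusted predictor is obtained by minimizing an expected loss subject to this constraint, and it may randomize between thresholds. The sketch below is a deliberately simplified version under those assumptions: it only picks one score threshold per group so that each group reaches a chosen target true positive rate. The data, the target value and the names `group_thresholds` and `tpr_by_group` are hypothetical.

```python
def true_positive_rate(preds, labels):
    """Fraction of truly positive entries (label 1) that are predicted positive."""
    hits = [p for p, y in zip(preds, labels) if y == 1]
    return sum(hits) / len(hits) if hits else 0.0


def tpr_by_group(preds, labels, groups):
    """True positive rate computed separately for each protected group."""
    return {
        g: true_positive_rate(
            [p for p, gg in zip(preds, groups) if gg == g],
            [y for y, gg in zip(labels, groups) if gg == g],
        )
        for g in sorted(set(groups))
    }


def group_thresholds(scores, labels, groups, target_tpr):
    """For each group, pick the highest score threshold whose TPR reaches target_tpr."""
    thresholds = {}
    for g in sorted(set(groups)):
        g_scores = [s for s, gg in zip(scores, groups) if gg == g]
        g_labels = [y for y, gg in zip(labels, groups) if gg == g]
        thresholds[g] = min(g_scores)  # fallback: predict everyone in the group positive
        # Lowering the threshold never decreases the TPR, so scan from the top down.
        for t in sorted(set(g_scores), reverse=True):
            preds = [1 if s >= t else 0 for s in g_scores]
            if true_positive_rate(preds, g_labels) >= target_tpr:
                thresholds[g] = t
                break
    return thresholds


# Hypothetical scores from an already trained predictor, true outcomes, and a
# protected feature with two groups "a" and "b".
scores = [0.9, 0.8, 0.7, 0.3, 0.6, 0.45, 0.4, 0.2]
labels = [1, 1, 0, 0, 1, 0, 1, 0]
groups = ["a"] * 4 + ["b"] * 4

# A single shared threshold gives the two groups different true positive rates.
shared = [1 if s >= 0.5 else 0 for s in scores]
print(tpr_by_group(shared, labels, groups))    # {'a': 1.0, 'b': 0.5}

# Group-specific thresholds; here both groups reach the target rate exactly.
thresholds = group_thresholds(scores, labels, groups, target_tpr=1.0)
adjusted = [1 if s >= thresholds[g] else 0 for s, g in zip(scores, groups)]
print(thresholds)                              # {'a': 0.8, 'b': 0.4}
print(tpr_by_group(adjusted, labels, groups))  # {'a': 1.0, 'b': 1.0}
```

Note that group b's new threshold also accepts a negative entry (score 0.45), so equalizing true positive rates costs an extra false positive here. The paper's optimization weighs such losses explicitly; this sketch does not, and with discrete scores a single threshold may only reach "at least" the target rather than hit it exactly.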

Their results significantly advance the state of the art in “fair” machine learning; however, they are unlikely to suit every possible setting, because the tests used to identify discriminatory predictions treat the predictor as a “black box”. Essentially, the proposed approach relies only on statistical distributions: it does not consider the specific features of the scenario at hand, the form of the predictor that produces the outcome, or how that predictor was derived. As a consequence, if two different scenarios produce the same scores, outcomes and group memberships, then whatever is judged unfair in one scenario is also judged unfair in the other.
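The paper refers to tests of this kind as “oblivious”. To make the limitation concrete, the sketch below applies one such test, the gap in true positive rates between groups that equality of opportunity asks to be zero, to two hypothetical scenarios. Their predictors use entirely different features (a credit score and years of experience) but happen to produce the same predictions, outcomes and group memberships. Because the test only receives those three columns, it cannot tell the scenarios apart and must return the same verdict for both. The data, thresholds and function names are invented for illustration.

```python
def equal_opportunity_gap(preds, labels, groups):
    """Largest difference in true positive rate between any two groups.

    The test only sees predictions, true outcomes and group membership; it never
    looks at the input features or at how the predictor was built. Assumes every
    group contains at least one entry with a positive outcome.
    """
    rates = []
    for g in sorted(set(groups)):
        hits = [p for p, y, gg in zip(preds, labels, groups) if gg == g and y == 1]
        rates.append(sum(hits) / len(hits))
    return max(rates) - min(rates)


def evaluate(predictor, records):
    """Run a predictor over (feature, group, outcome) records and apply the test."""
    preds = [predictor(feature) for feature, _, _ in records]
    groups = [g for _, g, _ in records]
    labels = [y for _, _, y in records]
    return equal_opportunity_gap(preds, labels, groups)


# Scenario 1 (hypothetical): approve a loan if the credit score is at least 700;
# the outcome is whether the applicant repaid.
loans = [(720, "a", 1), (650, "a", 1), (710, "b", 1), (740, "b", 1), (600, "b", 0)]
gap_loans = evaluate(lambda credit: int(credit >= 700), loans)

# Scenario 2 (hypothetical): shortlist a candidate with at least 5 years of
# experience; the outcome is whether the hire turned out well.
hiring = [(8, "a", 1), (2, "a", 1), (6, "b", 1), (10, "b", 1), (1, "b", 0)]
gap_hiring = evaluate(lambda years: int(years >= 5), hiring)

print(gap_loans, gap_hiring)  # 0.5 0.5
```

Whether a 0.5 gap is acceptable might reasonably differ between lending and hiring, yet a test that sees only these columns has no way to take that context into account.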

Due to the black box nature of these techniques, users have no way to control or affect, even partially, the decisions of the algorithm other than by manipulating the input data (where possible). Moreover, it is often difficult, if not impossible, to explain why the learning algorithm predicts a given outcome, or which criteria that prediction depends on.

Crucially, transparency, control over personal data, and the ability to directly affect algorithmic decisions have been identified as fundamental characteristics that the next generation of algorithms should have. This makes us wonder what role machine learning will play in this context, and how we can design new mechanisms that do not suffer from the black box characteristics of current techniques.

To overcome the black box problem, our first aim as part of the UnBias project is to provide a set of tools that expose possible biases to users. Then, by observing how users interact with these tools, what they think about them, and the type of problems they highlight, we aim to identify which fairness criteria matter most to users, what guarantees algorithms can give regarding these criteria, and what levels of transparency users are most comfortable with.
