A recent report from the BBC covers one instance of the ever-growing use of algorithms for social purposes and helps us to illustrate some key ethical concerns underpinning the UnBias project.

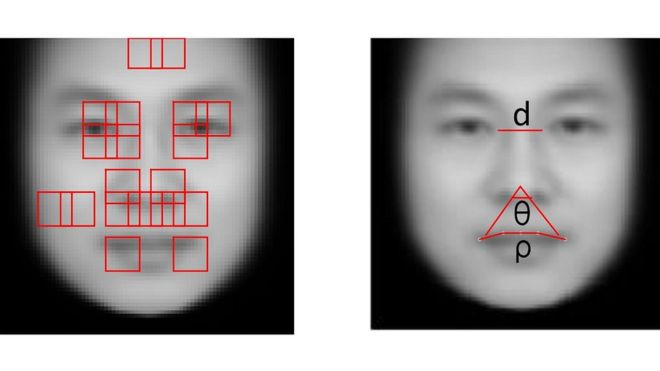

As reported by the BBC (http://www.bbc.co.uk/news/technology-38092196), researchers in China have developed and assessed a convict-spotting algorithm. The research investigates whether algorithms can correctly identify criminals based on their facial features. According to the BBC, the researchers reported that their algorithm correctly identified criminals 89% of the time. The algorithm was developed using just under 2,000 identification photographs of Chinese males aged 18 to 55 with criminal convictions. It functions by identifying patterns in the facial features of those men – for instance, the distance between the eyes or the curvature of the upper lip.

Though the research was presented as a positive development for the justice system, it is worrying that the researchers felt it might be possible to correlate physical characteristics with a propensity to commit crime and then to automate this connection. We only have to look to recent events, or to some of the darkest periods in history, to see that decision-making systems based on filtering by certain characteristics can have highly negative implications for groups, communities and entire societies.

This particular instance of algorithmic development raises similar questions to those we are asking in the UnBias project regarding user experiences of algorithm-driven internet platforms. If we automate decision-making, then who is accountable for those decisions? What if algorithms enable or are used as a rationale to justify discrimination? Whose values are embedded into algorithm design and what – intentional or unintentional – consequences might they have?

A central question is: how do we responsibly develop algorithms? A responsible approach recognises that algorithms may have both positive and negative implications. It further recognises that understanding these potential implications helps us to consider how harms (such as discriminatory values or bias becoming embedded into automated decision-making) can be mitigated or even prevented. In some circumstances, a responsible approach may encourage researchers to consider whether it is desirable even to attempt to develop algorithms for certain kinds of purposes. To make these kinds of decisions, a responsible approach to algorithm design requires input from relevant stakeholders of all kinds.
