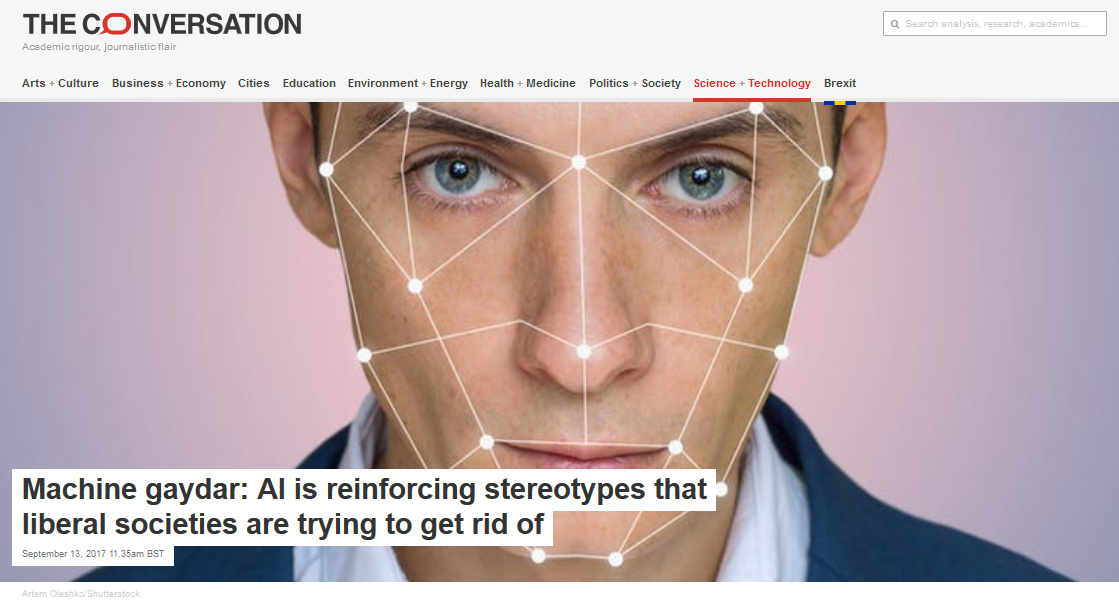

On September 7th the Guardian published an article drawing attention to a study from Stanford University that had applied Deep Neural Networks (a form of machine learning AI) to test whether they could distinguish people’s sexual orientation from facial images. After reading both the original study and the Guardian’s report on it, I found so many problematic aspects in the study that I immediately had to write a response, which was published in The Conversation on September 13th under the title “Machine gaydar: AI is reinforcing stereotypes that liberal societies are trying to get rid of”.

This title was chosen by the editor at The Conversation. My original proposal for the title was “AI society, a reduction to simplistic binaries?”, intended to highlight a more general concern: the push towards algorithmic categorization of people is accompanied by a tendency to reduce complex humans to discrete socio-psychological classes. The study that triggered my response was merely a headline-grabbing example of this trend, in this case reducing human sexuality to a gay/straight binary as part of its classification of sexual orientation from facial images.

Two subsequent articles in The Conversation delved deeper into specific issues: the misconceptions about sexuality underlying the study (Using AI to determine queer sexuality is misconceived and dangerous) and the stereotypes it relies on (Gay-identifying AI tells us more about stereotypes than the origins of sexuality).
