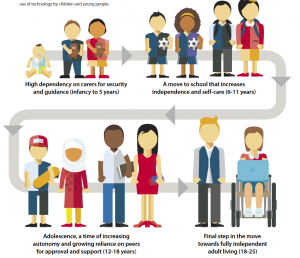

The Fairness Toolkit has been developed for UnBias by Giles Lane and his team at Proboscis, with input from young people and stakeholders. It is one of our project outputs, and it aims to promote awareness, stimulate a public civic dialogue about how algorithms shape online experiences, and encourage reflection on possible changes to address issues of online unfairness. The tools are not just for critical thinking but for civic thinking: they support a more collective approach to imagining the future, in contrast to the individualising, atomising effect that such technologies often have.

The toolkit contains the following elements:

1. Handbook

2. Awareness Cards

3. TrustScape

4. MetaMap

5. Value Perception Worksheets

All components of the Toolkit are freely available to download and print from our site under a Creative Commons license (CC BY-NC-SA 4.0).

Demonstrations of the toolkit will be given at the V&A Digital Design Weekend in London on September 22nd.

More information is available on the Fairness Toolkit and Trustscapes pages.
