The Fairness Toolkit has been developed for UnBias by Giles Lane and his team at Proboscis, with the input of young people and stakeholders. It is one of our project outputs aiming to promote awareness and stimulate a public civic dialogue about how algorithms shape online experiences and to reflect on possible changes to address issues of online unfairness. The tools are not just for critical thinking, but for civic thinking – supporting a more collective approach to imagining the future as a contrast to the individual atomising effect that such technologies often cause.

The toolkit contains the following elements:

1. Handbook

2. Awareness Cards

3. TrustScape

4. MetaMap

5. Value Perception Worksheets

All components of the Toolkit are freely available to download and print from our site under a Creative Commons license (CC BY-NC-SA 4.0).

Demonstrations of the toolkit will be given at the V&A Digital Design Weekend in London on September 22nd.

More information is available on the Fairness Toolkit and Trustscapes pages.

Continue reading UnBias Fairness Toolkit →

In light of the recent revelations about Cambridge Analytica and the breaches of trust involving Facebook and personal data, ISOC UK and the Horizon Digital Economy Research institute held a panel discussion on “Multi Sided Trust for Multi Sided Platforms”. The panel brought together representatives from different sectors to discuss trust on the Internet, focusing on consumer-to-business trust: how users come to trust the online services offered to them. Such services include, but are not limited to, online shopping, social media, online banking and search engines.

Continue reading ISOC UK / Horizon DER panel for Multi Sided Trust on Multi Sided Platforms →

AMOIA (Algorithm Mediated Online Information Access) – user trust, transparency, control and responsibility

This Web Science 2017 workshop, delivered by the UnBias project, will be an interactive audience discussion on the role of algorithms in mediating access to information online and the issues of trust, transparency, control and responsibility that this raises.

The workshop will consist of two parts. The first half will feature talks from the UnBias project and related work by invited speakers. The talks by the UnBias team will contrast the concerns and recommendations raised by teenage ‘digital natives’ in our Youth Juries deliberations and user observation studies with the perspectives and suggestions from our stakeholder engagement discussions with industry, regulators and civil-society organizations. The second half will be an interactive discussion with the workshop participants based on case studies. Key questions and outcomes from this discussion will be put online for WebSci’17 conference participants to refer to, discuss and comment on during the rest of the conference.

The case studies we will focus on:

- Case Study 1: The role of recommender algorithms in hoaxes and fake news on the Web

- Case Study 2: Business models that share AMOIA: how can web science boost Corporate Social Responsibility / Responsible Research and Innovation?

- Case Study 3: Unintended algorithmic discrimination on the web – routes towards detection and prevention

The UnBias project investigates the user experience of algorithm-driven services and the processes of algorithm design. We focus on the interests of a wide range of stakeholders and carry out activities that 1) support user understanding of algorithm-mediated information environments, 2) raise awareness among providers of ‘smart’ systems about the concerns and rights of users, and 3) generate debate about the ‘fair’ operation of algorithms in modern life. This EPSRC-funded project will provide policy recommendations, ethical guidelines and a ‘fairness toolkit’ that will be co-produced with stakeholders.

The workshop will be a half-day event.

Programme

9:00 – 9:10 Introduction

9:10 – 9:30 Observations from the Youth Juries deliberations with young people, by Elvira Perez (University of Nottingham)

9:30 – 9:50 Insights from user observation studies, by Helena Webb (University of Oxford)

9:50 – 10:10 Insights from discussions with industry, regulator and civil-society stakeholders, by Ansgar Koene (University of Nottingham)

10:10 – 10:30 “Platforms: Do we trust them”, by Rene Arnold

10:30 – 10:50 “IEEE Global Initiative for Ethical Considerations in Artificial Intelligence and Autonomous Systems”, by John Havens

10:50 – 11:10 Break

11:10 – 11:50 Discussion of case study 1

11:50 – 12:30 Discussion of case study 2

12:30 – 12:50 Break

12:50 – 13:30 Discussion of case study 3

13:30 – 14:00 Summary of outcomes

Key dates

Workshop registration deadline: 18 June 2017

Workshop date: 25 June 2017

Conference dates: 26-28 June 2017

We are pleased to announce that the report summarizing the outcomes of the first UnBias project stakeholder engagement workshop is now available for public dissemination.

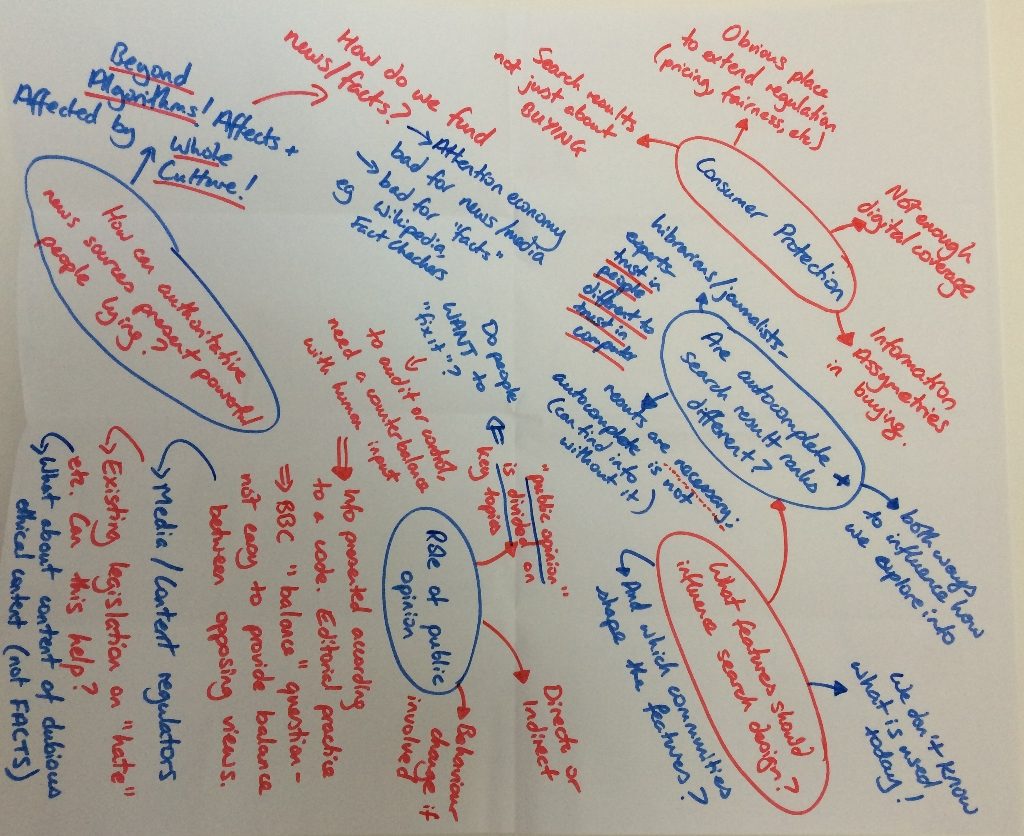

The workshop took place on February 3rd 2017 at the Digital Catapult centre in London, UK. It brought together participants from academia, education, NGOs and enterprises to discuss fairness in relation to algorithmic practice and design. At the heart of the discussion were four case studies highlighting fake news, personalisation, gaming the system, and transparency.

Continue reading Publication of 1st WP4 workshop report →

Many multi-user scenarios are combinatorial in nature: an algorithm can make meaningful decisions for the users only if it considers all of their requirements and preferences at the same time when selecting a solution from a huge space of possible system decisions. Examples of such scenarios include sharing-economy applications, where users look for peers to form teams with in order to accomplish a task, and situations in which a limited number of potentially different resources, e.g. hotel rooms, must be distributed among users who have preferences over them.
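To make the combinatorial point concrete, here is a minimal sketch of the hotel-room case, assuming each user gives a numeric utility to each room and that "fair" means maximising the utility of the worst-off user (a maximin criterion, chosen here for illustration and not necessarily the one used in the full post). The function name and the preference numbers are hypothetical. Even this tiny brute-force search has to look at every possible assignment at once, which is exactly what makes such problems hard.

```python
from itertools import permutations


def maximin_assignment(prefs):
    """Assign one resource per user, maximising the worst-off user's utility.

    prefs[u][r] is user u's (hypothetical) utility for resource r.
    Assumes the number of resources equals the number of users and
    simply enumerates all n! possible assignments.
    """
    n = len(prefs)
    best, best_score = None, float("-inf")
    for perm in permutations(range(n)):
        # perm[u] is the resource given to user u.
        worst_off = min(prefs[u][perm[u]] for u in range(n))
        if worst_off > best_score:  # keep the first assignment reaching a new best
            best, best_score = perm, worst_off
    return best, best_score


# Three users, three rooms; each row is one user's utilities for rooms 0..2.
prefs = [[3, 1, 2],
         [3, 2, 1],
         [1, 3, 2]]
print(maximin_assignment(prefs))
```

The number of assignments grows factorially: with 10 users and 10 rooms there are already 10! = 3,628,800 candidates, so practical systems need smarter search than this exhaustive loop.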

Continue reading How hard is it to be fair in multi-user combinatorial scenarios? →

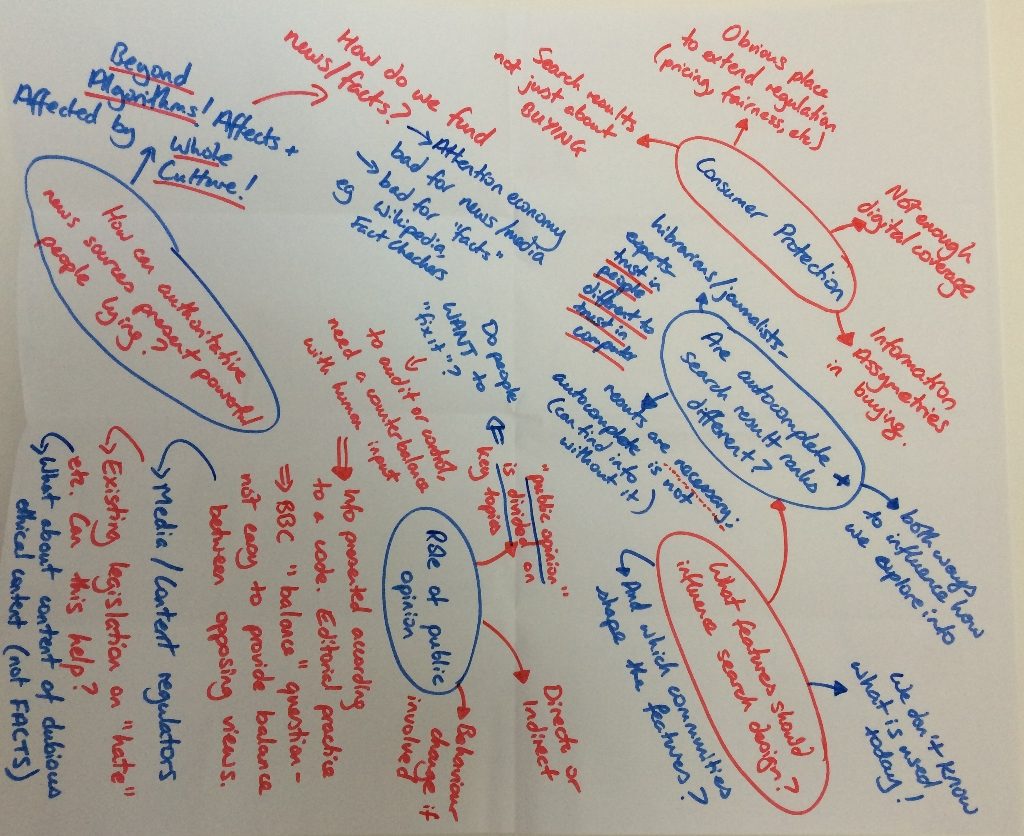

On February 3rd a group of twenty-five stakeholders joined us at the Digital Catapult in London for our first discussion workshop.

The User Engagement workpackage of the project focuses on bringing together professionals from industry, academia, education, NGOs and research institutes to discuss the societal and ethical issues surrounding the design, development and use of algorithms on the internet. We aim to create a space where these stakeholders can come together and discuss their various concerns and perspectives. This includes identifying differences of opinion. For example, participants from industry often view algorithms as proprietary and commercially sensitive, whereas those from NGOs frequently call for greater transparency in algorithmic design. It is important for us to draw out these varying perspectives and understand in detail the reasoning behind them. Then, combined with the outcomes of the other project workpackages, we can identify points of resolution and produce outputs that seek to advance responsibility on algorithm-driven internet platforms.

Continue reading First UnBias Multi-Stakeholder Workshop →

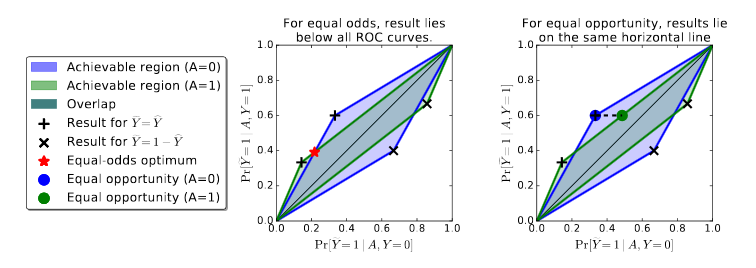

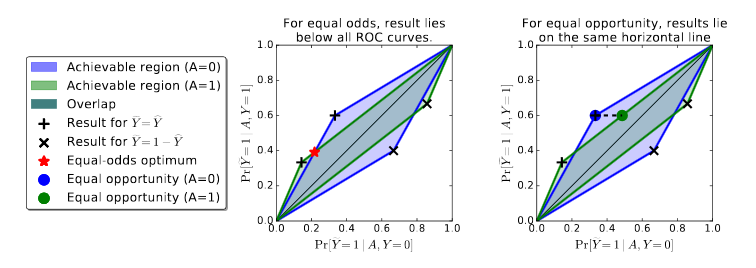

An important topic considered this year at the International Conference on Neural Information Processing Systems (NIPS), one of the world's leading venues for machine learning and artificial intelligence research, is the connection between machine learning, law and ethics. In particular, a paper presented by Moritz Hardt, Eric Price and Nathan Srebro focused on “Equality of Opportunity in Supervised Learning”.
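In Hardt, Price and Srebro's formulation, a binary classifier satisfies "equal opportunity" when its true positive rate is the same for every group defined by a protected attribute A, i.e. P(Ŷ = 1 | A = a, Y = 1) is equal for all a. The sketch below only measures how far a set of predictions is from that condition; it does not implement the paper's post-processing method for correcting a classifier. The function names and the toy data are hypothetical.

```python
def true_positive_rate(y_true, y_pred, group, g):
    """Fraction of truly positive members of group g that were predicted positive."""
    preds_for_positives = [p for t, p, a in zip(y_true, y_pred, group)
                           if a == g and t == 1]
    if not preds_for_positives:
        raise ValueError(f"group {g!r} has no positive examples")
    return sum(preds_for_positives) / len(preds_for_positives)


def equal_opportunity_gap(y_true, y_pred, group):
    """Largest difference in true positive rate between any two groups.

    A gap of 0 means the predictions satisfy equal opportunity on this data.
    """
    rates = {g: true_positive_rate(y_true, y_pred, group, g)
             for g in sorted(set(group))}
    return max(rates.values()) - min(rates.values()), rates


# Toy labels (1 = qualified), predictions (1 = accepted) and group membership.
y_true = [1, 1, 0, 1, 1, 0]
y_pred = [1, 0, 0, 1, 1, 1]
group = ["a", "a", "a", "b", "b", "b"]
print(equal_opportunity_gap(y_true, y_pred, group))
```

Here qualified members of group "b" are always accepted, while only half of the qualified members of group "a" are, so the criterion is violated even though the classifier is not explicitly told to treat the groups differently.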

Continue reading Fair machine learning techniques and the problem of transparency →

A lot has been said about algorithms working as gatekeepers and making decisions on our behalf, often without us noticing. I can certainly find an example in my daily life where I do notice it and benefit from it: the “Discover Weekly” Spotify playlist. By comparing my listening habits to those of other users with similar but not identical choices, Spotify allows information on the fringes to be shared. The playlist is thus “tailored” to my music taste, and it is remarkably accurate in predicting things I would like. Moreover, it lets me discover new music and bands, and on many occasions it also takes me back in time with tunes I have probably not listened to for a long time.
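The idea of comparing one listener with others who have "similar but not identical" habits is the core of user-based collaborative filtering. The sketch below is a generic textbook version, not Spotify's actual system (which is not described here); the play-count dictionaries, track names and function names are all hypothetical. Each other user's unheard tracks are scored by how similar that user is to the target listener, measured with cosine similarity.

```python
import math


def cosine(u, v):
    """Cosine similarity between two users' {track: play_count} dictionaries."""
    common = set(u) & set(v)
    dot = sum(u[t] * v[t] for t in common)
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def recommend(target, others, k=3):
    """Return up to k tracks the target has not played, best-scoring first."""
    scores = {}
    for other in others:
        sim = cosine(target, other)
        if sim <= 0:
            continue  # users with no overlap contribute nothing
        for track, plays in other.items():
            if track not in target:
                # Weight the neighbour's enthusiasm by how similar they are.
                scores[track] = scores.get(track, 0.0) + sim * plays
    return sorted(scores, key=scores.get, reverse=True)[:k]


me = {"a": 5, "b": 3}
others = [
    {"a": 4, "b": 2, "c": 6},  # similar taste, plus a track I have not heard
    {"a": 1, "d": 2},          # partial overlap
    {"e": 9},                  # no overlap at all
]
print(recommend(me, others))
```

Track "e" is never suggested because its only listener shares nothing with the target, which also hints at the gatekeeping concern: what counts as "similar" decides what a listener gets to discover.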

Continue reading Algorithmic discrimination: are you IN or OUT? →
