Aims of stakeholder workshops

Our UnBias stakeholder workshops bring together individuals from a range of professional backgrounds who are likely to have differing perspectives on issues of fairness in relation to algorithmic practices and algorithmic design. The workshops are opportunities to share perspectives and seek answers to key project questions such as:

- What constitutes a fair algorithm?

- What kinds of (legal and ethical) responsibilities do internet companies have to ensure their algorithms produce results that are fair and without bias?

- What factors might serve to enhance users’ awareness of, and trust in, the role of algorithms in their online experience?

- How might concepts of fairness be built into algorithmic design?

The workshop discussions will be summarised in written reports and will inform other activities in the project. These include the production of policy recommendations and the development of a fairness toolkit consisting of three co-designed tools: 1) a consciousness-raising tool for young internet users to help them understand online environments; 2) an empowerment tool to help users navigate online environments; 3) an empathy tool for online providers and other stakeholders to help them understand the concerns and rights of (young) internet users.

The case studies

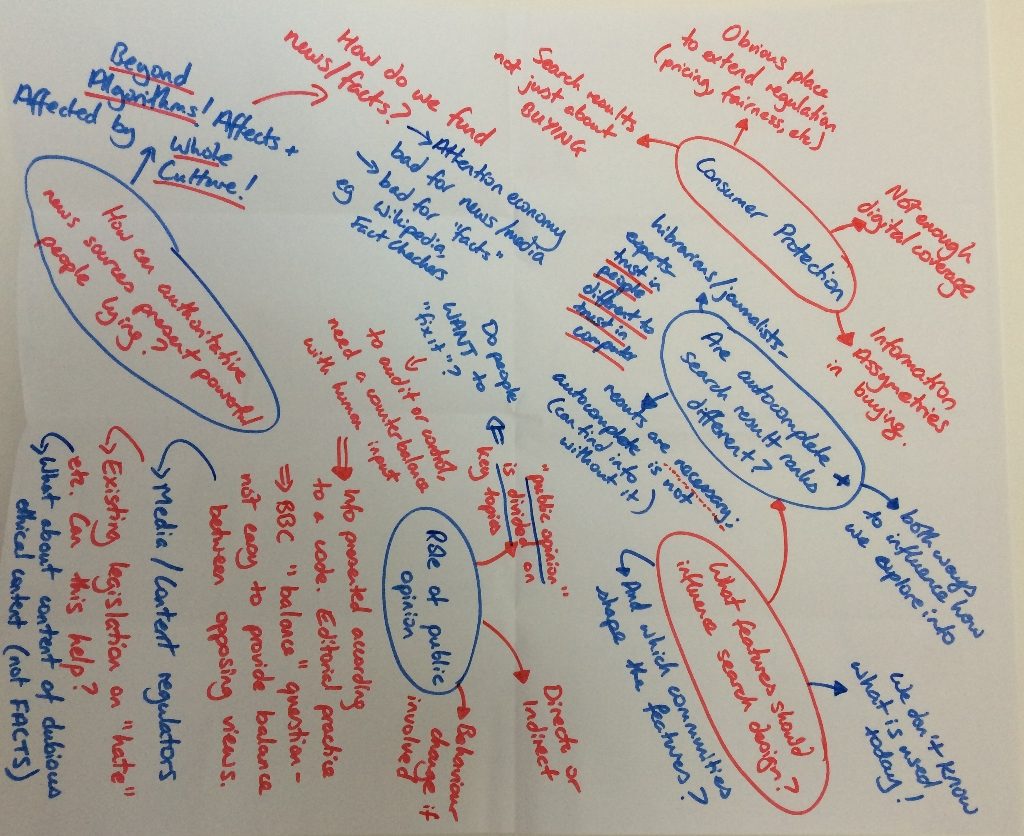

We have prepared four case studies concerning key current debates around algorithmic fairness. These relate to: 1) gaming the system – anti-Semitic autocomplete and search results; 2) news recommendation and fake news; 3) personalisation algorithms; 4) algorithmic transparency.

The case studies will help to frame discussion in the first stakeholder workshop on February 3rd 2017. Participants will be divided into four discussion groups with each group focusing on a particular case study and questions arising from it. There will then be an opportunity for open debate on these issues. You might like to read through the case studies in advance of the workshop and take a little time to reflect on the questions for consideration put forward at the end of each one. If you have a particular preference to discuss a certain case study in the workshop please let us know and we will do our best to assign you to that group.

Definitions:

To aid discussion we also suggest the following definitions for key terms:

Bias – unjustified and/or unintended deviation in the distribution of algorithm outputs, with respect to one, or more, of its parameter dimensions.

Discrimination (should relate to legal definitions of protected categories) – unequal treatment of persons on the basis of ‘protected characteristics’ such as age, sexual identity or orientation, marital status, pregnancy, disability, race (including colour, nationality, ethnic or national origin), religion (or lack of religion). This includes situations where a ‘protected characteristic’ is indirectly inferred via proxy categories.

Fairness – a context dependent evaluation of the algorithm processes and/or outcomes against socio-cultural values. Typical examples might include evaluating: the disparity between best and worst outcomes; the sum-total of outcomes; worst case scenarios.
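As an illustration only, the three example evaluations named in the fairness definition can be sketched in a few lines of Python. The function name `fairness_summary`, the outcome scores and the assumption that a higher score means a better outcome are all hypothetical and are not part of the UnBias project materials. Which of these measures matters most remains a context-dependent judgement.

```python
def fairness_summary(outcomes):
    """Summarise a list of outcome scores, one per person or group.

    Assumption (hypothetical): a higher score means a better outcome.
    """
    if not outcomes:
        raise ValueError("outcomes must not be empty")
    best = max(outcomes)
    worst = min(outcomes)
    return {
        "disparity": best - worst,    # gap between best and worst outcomes
        "sum_total": sum(outcomes),   # sum-total of outcomes
        "worst_case": worst,          # worst-case scenario
    }


# Hypothetical outcome scores for four users of an imagined service.
print(fairness_summary([8, 5, 9, 2]))
# {'disparity': 7, 'sum_total': 24, 'worst_case': 2}
```

Two algorithms can produce the same sum-total while differing sharply in disparity or worst case, which is one reason the definition treats fairness as context dependent.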

Transparency – the ability to see into the workings of the algorithm (and the relevant data) in order to know how the algorithm outputs are determined. This does not have to require publication of the source code, but might instead be more effectively achieved by a schematic diagram of the algorithm’s decision steps.

Workshop schedule:

- 9:45 – 10:00 Welcome/informal networking

- 10:00 – 10:30 Brief introduction to the UnBias project & completion of the pre-workshop questionnaire

- 10:30 – 10:45 Coffee break / choosing of case-study discussion group

- 10:45 – 11:30 Case-study discussion

- 11:30 – 11:45 Coffee break

- 11:45 – 13:00 Results from case study groups opened up for plenary discussion

- 13:00 – 13:30 Wrap up, open discussion and networking

Privacy/confidentiality and data protection

All the workshops will be audio recorded and transcribed. This is to facilitate our analysis and ensure that we capture all the detail of what is discussed. We will remove or pseudonymise the names of participating individuals and organisations as well as other potentially identifying details. We will not reveal the identities of any participants (except at the workshops themselves) unless we are given explicit permission to do so. We will also ask all participants to observe the Chatham House rule – meaning that views expressed can be reported back elsewhere but that individual names and affiliations cannot.

We invite stakeholders from academia, education, government/regulatory oversight organizations, civil society, media, industry and entrepreneurs to contribute to our ongoing research study by taking part in a small number of stakeholder engagement workshops. These workshops will explore the implications of algorithm-mediated interactions on online platforms. They provide an opportunity for relevant stakeholders to put forward their perspectives and discuss the ways in which algorithms shape online behaviours, in particular in relation to access and the dissemination of information to users. The workshops will provide an excellent opportunity for participants to exchange ideas and explore solutions with perspectives from a wide range of stakeholders. Following each workshop the participants will receive an anonymized report of the outcomes, which will contribute to the production of policy recommendations as well as the design of a ‘fairness toolkit’ for users, online providers and other stakeholders.
